Originally posted on PhysiologicalComputing.net.

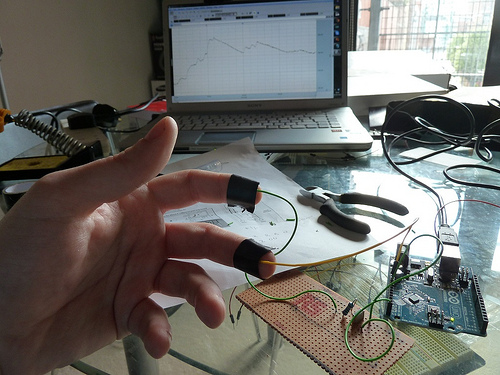

Recently I’ve been developing mechanics for a range of biofeedback projects, one of which was featured over the summer in an art exhibit at FACT Liverpool. These projects have been developed with the general public in mind, and so I’ve been working with consumer electronics rather than the research-grade devices I normally use.

I like working with consumer electronics, chiefly because they tend to have gone through some form of quality assurance (QA). As research sensors are highly specialised, and the potential customer base rather small, QA testing can unfortunately fall by the wayside. Consequently a lot of devices can feel like an early alpha build: very rough round the edges and liable to crash at a moment’s notice. Much to the chagrin of the customer, they end up responsible for performing their own impromptu QA testing before they can start using the sensor for research (e.g. I’ve had to return several sensors that failed the basic “does the on button work” test).

While you never know if a research-grade device is actually going to work, at least for the most part these devices describe how they’re supposed to work. Consumer electronics tend to lack this level of transparency and don’t describe how they process the data they collect. This is somewhat understandable: companies have IP to protect, and if they published the data processing routines for their products anyone could replicate them and compete with them (or, if you were so inclined, validate those routines).

Though to be honest, a lot of the IP they’re protecting is already widely published, as there is an academic market for physiological processing routines and a lot of commonality between workflows (e.g. sample signal, filter signal, detect features, correct errors). Sometimes IP protection can be ridiculously over the top as well: I once asked a company about the time window used for an average the sensor was providing, and sadly that was proprietary knowledge.
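To give a feel for how generic that workflow is, here is a minimal Python sketch of the four steps (sample, filter, detect features, correct errors) run on a synthetic signal. Everything in it is an assumption made for illustration: the function names, the 100 Hz sample rate, the threshold and the 0.3 second minimum interval are made up, and it is not any vendor’s actual routine.

```python
def moving_average(signal, width):
    """Filter step: smooth the signal with a trailing moving average."""
    smoothed = []
    running = 0.0
    for i, x in enumerate(signal):
        running += x
        if i >= width:
            running -= signal[i - width]
        smoothed.append(running / min(i + 1, width))
    return smoothed


def detect_peaks(signal, threshold):
    """Feature detection step: indices of local maxima above a threshold."""
    return [
        i
        for i in range(1, len(signal) - 1)
        if signal[i] > threshold
        and signal[i] > signal[i - 1]
        and signal[i] >= signal[i + 1]
    ]


def drop_implausible_beats(beat_times, min_interval=0.3):
    """Error correction step: discard beats too close to the last accepted one."""
    accepted = []
    for t in beat_times:
        if not accepted or t - accepted[-1] >= min_interval:
            accepted.append(t)
    return accepted


# Sample step: a synthetic 5 second recording at an assumed 100 Hz,
# with one "beat" every 0.8 s and one spurious spike at 0.5 s.
SAMPLE_RATE = 100
raw = [1.0 if i % 80 == 40 else 0.0 for i in range(5 * SAMPLE_RATE)]
raw[50] = 1.0  # artefact that the error correction should remove

filtered = moving_average(raw, width=3)
peaks = detect_peaks(filtered, threshold=0.2)
beats = drop_implausible_beats([i / SAMPLE_RATE for i in peaks])
print(beats)
```

A real device would use a proper band-pass filter and a more careful beat detector, but the overall shape of the pipeline is much the same, which is rather the point.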

Proprietary measures only really become a problem when they exist in a vacuum. For example, as Steve recently mentioned, the psychological constructs measured by the Emotiv seem to be linked to contradictory states. As there is no description of how these measures are derived, it is impossible to validate them. For the most part you can work around such measures. For example, many heart rate monitors provide a measure of average heart rate along with the individual beats they detected. While the average measure tends to be proprietary, as you have the feature data derived from the raw ECG signal you could easily enough create your own average (you could try identifying the averaging epoch from the feature data, but you’ll often find error correction is also being performed on the measure, which prevents this and is again proprietary). As to why you would want to work around a proprietary measure, even a simplistic one, well, that depends on what you’re using it for, but my point is that if you don’t have access to the processing workflow behind a measure then there’s no guarantee it’s detecting what it says it’s detecting.
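As a rough illustration of rolling your own average from the beat data, the sketch below computes a mean heart rate from a list of beat timestamps. The function name `mean_heart_rate` and its parameters are hypothetical, and the window length is exactly the sort of detail a vendor may not disclose, so here it is simply something you choose yourself.

```python
def mean_heart_rate(beat_times, window_seconds, now=None):
    """Mean heart rate in beats per minute over the most recent window.

    beat_times: beat timestamps in seconds, in ascending order.
    window_seconds: length of the averaging window (your own choice).
    now: end of the window; defaults to the time of the last beat.
    """
    if now is None:
        now = beat_times[-1] if beat_times else 0.0
    recent = [t for t in beat_times if now - window_seconds <= t <= now]
    if len(recent) < 2:
        return None  # need at least two beats to form an interval
    intervals = [b - a for a, b in zip(recent, recent[1:])]
    mean_interval = sum(intervals) / len(intervals)
    return 60.0 / mean_interval


# Example: beats one second apart, averaged over the last 10 seconds.
print(mean_heart_rate([0.0, 1.0, 2.0, 3.0, 4.0], window_seconds=10.0))
```

Because you control the window and there is no hidden error correction, you at least know what this average represents, even if it won’t match the number the device reports.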

In my experience, transparency is associated with research-grade devices and QA with commercial electronics. It’s an unfortunate trade-off, as having to perform QA as a researcher is not the most exciting of activities. Commercial sensors are improving with respect to transparency, especially as the price of sensor hardware drops and more sensors are developed. However, the market for new types of research sensors isn’t going to go away, and so some unfortunate soul is going to spend their nights in the lab stuck doing QA.